PANDORA Upgrade: Particle Dispersion in Bigger Turbulent Boxes

eCSE11-01

Key Personnel

PI/Co-I: Prof. John Shrimpton (University of Southampton)

Technical: Thorsten Wittemeier (University of Southampton), Dr. David Scott (EPCC)

Relevant documents

eCSE Technical Report: PANDORA Upgrade: Particle Dispersion in Bigger Turbulent Boxes

Project summary

PANDORA is a pseudo-spectral flow solver that solves the Navier-Stokes equations in a cubic domain for homogeneous isotropic turbulent flow. It also tracks a large number of Lagrangian point particles.

A new version of PANDORA has been implemented within this eCSE project. The main aim of the project was to overcome the limitations of the previous code in terms of memory use and efficiency, which prevented its use for large-scale computations. First of all, a significant amount of the data stored in the old code was not necessary. Memory use has been drastically reduced by keeping only those data that are strictly necessary: for fluid simulations, PANDORA 2.0 uses only about a tenth of the memory of the previous version.

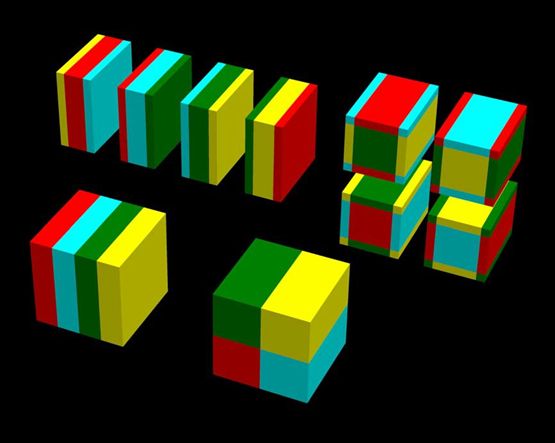

Another important change concerns the parallelisation of the code. Each particle is stored on the process that holds the fluid velocity field for the part of the simulation domain in which the particle is located. Computing the particle velocities requires interpolating the fluid velocity at the particle positions, and for particles near the boundary of a subdomain this needs information from the neighbouring process (halo nodes). Changing the parallelisation from a one-dimensional to a two-dimensional decomposition significantly reduced memory use.
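The effect of the decomposition on halo storage can be illustrated with a small sketch. It assumes an N³ grid that is split evenly along one axis (slab) or two axes (pencil), with a halo of fixed width on both sides of every decomposed axis. The function names, the halo width of 2 and the process counts are illustrative assumptions and are not taken from PANDORA; halo storage is also only one plausible contribution to the memory savings reported here.

```python
def local_shape(n, procs_y, procs_z):
    """Interior grid points per process for an n^3 grid split over a
    procs_y x procs_z process grid (a slab decomposition has procs_y == 1)."""
    if n % procs_y or n % procs_z:
        raise ValueError("n must be divisible by both process grid dimensions")
    return (n, n // procs_y, n // procs_z)


def halo_points(shape, halo, decomposed_axes):
    """Extra points stored when `halo` layers are added on both sides of
    every decomposed axis of a subdomain with the given interior shape."""
    padded = [s + 2 * halo if axis in decomposed_axes else s
              for axis, s in enumerate(shape)]
    interior = shape[0] * shape[1] * shape[2]
    return padded[0] * padded[1] * padded[2] - interior


# Hypothetical example: 1024^3 grid, 1024 processes, halo width 2.
n, halo = 1024, 2
slab = local_shape(n, 1, 1024)     # split along one axis only
pencil = local_shape(n, 32, 32)    # split along two axes

slab_ratio = halo_points(slab, halo, {2}) / (slab[0] * slab[1] * slab[2])
pencil_ratio = halo_points(pencil, halo, {1, 2}) / (pencil[0] * pencil[1] * pencil[2])

print("slab halo/interior:  ", slab_ratio)
print("pencil halo/interior:", pencil_ratio)
```

With these assumed values the slab layout stores four times as many halo points as interior points per process, while the pencil layout stores about 0.27 times as many. A slab decomposition also cannot use more processes than there are grid planes (1024 in this example), whereas a pencil decomposition can.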

So far the code has been successfully tested on simulation grids of up to 8192³. The strong scalability of simulations with particles has proven to be nearly ideal. Fluid simulations show reasonably good scaling behaviour within certain limits, which allows for good performance on all domain sizes. The use of PETSc for the parallel arrays as well as for parallel file reading and writing makes future extensions easier to implement.

The new code gives access to simulations with large numbers of particles at high Reynolds numbers even where only limited resources are available. Levels of turbulence that have not previously been studied with particles are now accessible. The code is currently being extended to allow the study of particles in homogeneous shear flows. Channel flow simulations are also planned for the future.

Figure 1: By changing the parallelisation from a one-dimensional to a two-dimensional decomposition, as illustrated in the picture, a significant reduction in memory use could be achieved.

Achievement of objectives

A completely new version of PANDORA was implemented. The main objectives were as follows:

- Reimplement the PANDORA code in modern Fortran and change from the slab (1D) decomposition to a more flexible pencil (2D) decomposition. Success metrics were to demonstrate the correctness of the code as well as its scalability with and without particles.

  - Based on simulations of forced isotropic turbulence, we compared the dissipation constant obtained with the new code against the old code and published results and found good agreement with both.

  - Fluid simulations with the new code show good speed-up initially, but the speed-up eventually stagnates.

  - For simulations with particles, which are the main application of PANDORA, the new code shows near-linear scaling.

- Reduce memory use to enable the code to run bigger simulations on the same hardware resources.

  - Several test cases served as success metrics. These were simulations on a 4096³ grid using 128 ARCHER nodes with memory use of no more than 54 GB/node, a 2048³ grid using 64 ARCHER nodes with no more than 14 GB/node, and a 1024³ grid using 32 ARCHER nodes with no more than 3.5 GB/node. Significantly lower memory use was achieved, with 23.8 GB, 6.3 GB and 1.9 GB respectively. The smallest of these cases is the largest still possible with the old code, which uses about 10 times more memory.

  - We expected that a fluid simulation on an 8096³ grid could be run on 650-1280 ARCHER nodes. This is of a similar order to what has been used for the few published simulations at this size. Memory savings allowed us to test this simulation grid on only 512 ARCHER nodes (12288 cores), using 48 GB of memory per node. The tests performed within the eCSE project demonstrate that the new code is suitable for simulations at very competitive sizes while making only moderate use of computing resources.

  - A further success metric was a 2048³ grid test case with one particle per control volume (a total of 8,589,934,592 particles) with a memory use of no more than 25 GB per node. This target was not entirely achieved because, contrary to the original plan, it was decided to use three-dimensional real-space velocity arrays for the interpolation of fluid velocities at the particle positions. The original idea of using ghost particles rather than fluid halos turned out to be too difficult to implement. It also needs to be emphasised that for many particles (more than one per control volume) fluid halos use less memory.

- Reimplement the parallel restart file routines to improve efficiency, reduce file size and allow for more data formats.

  - Using the 2/3 rule, the size of the fluid restart files has been reduced to about 8/27 of the previous file size.

  - The restart files are implemented in the MPI-IO format as this is the fastest option. However, the implementation was done using PETSc routines, so further formats, in particular VTK and HDF5, can be added with minimal changes.

  - The overall speed of the I/O operations has improved roughly in proportion to the reduction in file size.

- Make future extensions of the code easier.

  - The parallelisation using PETSc arrays allows for an easy implementation of additional arrays.

  - The routines for the Fourier transforms and global transforms have been implemented as subroutines in a dedicated module. Reuse of these subroutines or exchanging the FFTW library for another FFT implementation has been made easier.

  - Use of the Fortran 2003/2008 and C data types makes interoperability with C/C++ functions significantly easier.

  - PETSc provides access to a wide range of linear algebra routines and solvers.

  - Code documentation using Doxygen makes the code easier to understand.
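Several of the figures quoted in the list of objectives above can be checked with a few lines of arithmetic. The sketch below assumes that the 2/3 rule retains two thirds of the modes in each of the three directions, that the achieved memory figures are per node like the targets, and that 1 GB means 10⁹ bytes. These are assumptions about conventions rather than statements from the project, and all variable names are illustrative.

```python
from fractions import Fraction

# Assumption: the 2/3 rule keeps 2/3 of the modes in each of the three
# directions, so the stored fraction of a full-size file is (2/3)^3.
retained_fraction = Fraction(2, 3) ** 3

# One particle per control volume on a 2048^3 grid.
particles = 2048 ** 3

# Largest test: 512 ARCHER nodes, 12288 cores, 48 GB of memory per node.
cores_per_node = 12288 // 512
total_memory_gb = 512 * 48

# (grid points per direction, nodes, target GB/node, achieved GB/node)
memory_cases = [
    (4096, 128, 54.0, 23.8),
    (2048, 64, 14.0, 6.3),
    (1024, 32, 3.5, 1.9),
]


def bytes_per_grid_point(n, nodes, gb_per_node):
    """Total memory over all nodes divided by the number of grid points
    (assumes 1 GB = 1e9 bytes)."""
    return gb_per_node * 1e9 * nodes / n ** 3


print("restart file fraction:", retained_fraction)
print("particles:", particles)
print("cores per node:", cores_per_node, "total memory (GB):", total_memory_gb)
for n, nodes, target, achieved in memory_cases:
    print(n, "achieved/target:", round(achieved / target, 2),
          "bytes per grid point:", round(bytes_per_grid_point(n, nodes, achieved), 1))
```

Under these assumptions the restart file fraction is exactly 8/27, the particle count matches the 8,589,934,592 quoted above, and the achieved memory figures correspond to roughly 44 to 57 bytes per grid point.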

Summary of the Software

PANDORA 2.0 is a pseudospectral DNS solver for homogeneous isotropic turbulence with passive and inertial Lagrangian particles. It also includes code for the simulation of homogeneous shear flows.

The source code is available under a BSD license in the repository https://bitbucket.org/pandoradns/

The source code includes scripts and Makefiles for the installation on ARCHER. It depends on the libraries PETSc and FFTW and also makes use of environment modules.

Scientific Benefits

The new code can be used to perform simulations on bigger computing grids, enabling us to study turbulent flows at higher Reynolds numbers. An extension towards other types of homogeneous turbulence, in particular shear flow, is currently underway. The code is also expected to be used for simulations of two-way coupled flows.