A fully Lagrangian dynamical core for the Met Office NERC Cloud Model

eCSE12-10

Key Personnel

PI/Co-I: David Dritschel (University of St Andrews, PI), Nick Brown (EPCC, Co-I), Michèle Weiland (EPCC, Co-I), Doug Parker (University of Leeds, Co-I), Alan Blyth (University of Leeds, Co-I)

Technical: Gordon Gibb (EPCC), Steven Böing (University of Leeds)

Relevant documents

eCSE Technical Report: A fully Lagrangian dynamical core for the Met Office NERC Cloud Model

Project summary

The turbulent behaviour of clouds is responsible for many of the uncertainties in weather and climate prediction. Weather and climate models fail to resolve the details of the interactions between clouds and their environment and suffer from a crude representation of microphysical processes, such as rain and snow formation. These processes can be studied in high-resolution "Large Eddy Simulations" (LES) (using a grid spacing below 100 metres) where the interaction between clouds and the environment is approximately resolved.

However, even at these resolutions (which are not affordable in global weather and climate models) LES still suffer from substantial sensitivity to numerical mixing [1,2,3]. This is due to the highly non-linear nature of the dynamics and thermodynamics of clouds.

To overcome these difficulties, we have recently developed a prototype method called "Moist Parcel-In-Cell" (MPIC), which treats the dynamics of clouds in an essentially Lagrangian framework, i.e. by directly following parcels of fluid rather than representing quantities on a grid [4,5]. The Lagrangian framework avoids the serious discretisation errors that arise from nonlinear "advection" or transport.

In grid-based (Eulerian) methods, quantities which should remain unchanged following fluid parcels are incorrectly treated, resulting in the improper treatment of many important processes. In contrast, MPIC preserves such quantities and treats advection in a natural, simple and much more accurate way.

MPIC represents both dynamics and processes explicitly using Lagrangian parcels that carry volume, circulation and thermodynamic properties (e.g. potential temperature and moisture content). This approach largely overcomes the problems due to numerical mixing at small scales that are inherent to Eulerian methods, and could be applied to a wide range of geoscientific problems.

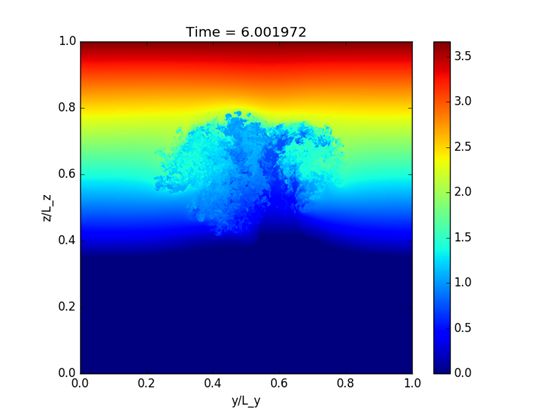

The purpose of the eCSE project was to port the original code, which had limited parallelism, to modern massively-parallel computer architectures, enabling much larger problems to be studied in unprecedented detail (see for example figure 1). We also aimed to make MPIC easily usable by the UK weather and climate communities, in particular the large community of researchers involved in the NERC/Met Office ParaCon programme and the Met Office convection division led by Dr Alison Stirling. To this end we have adopted and built upon the existing Met Office NERC Cloud Model (MONC) framework. MONC is an LES research model that not only exhibits good parallel performance and scalability to over 32,768 cores on the ARCHER massively-parallel system, but also performs in-situ data analytics and fast input/output.

We achieved our primary objective by using much of the existing parallel and solver functionality in MONC while replacing the LES "dynamical core" with the Lagrangian approach of MPIC. This has made it straightforward for members of the MONC community to pick up and take advantage of our work. We believe this will enable a step change in the ability to model the effects of clouds, currently the greatest uncertainty in weather and climate prediction. The MONC community is the first worldwide to have an atmospheric model based on this novel methodology. The MPIC method is efficient because the operations performed at the parcel level are simple. Our first studies, in which the two models are compared using simplified moist thermodynamics, show that MPIC results generally compare well with those of the Eulerian core of MONC. However, MPIC represents the mixing process in a much more physically plausible way, as the stretching, splitting and merging of parcels, and provides a higher effective resolution at the same grid spacing.

MPIC would be particularly suitable for future applications that involve the transport of many different constituents such as aerosols and microphysical droplet size distributions. This is because advection or transport of any quantity in MPIC is simply a change in the position of three parcel coordinates, rather than an operation that is performed for each constituent separately. The computational cost of advection (besides MPI communication) is therefore largely independent of the number of constituents.
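The point about constituent-independent advection can be illustrated with a minimal sketch. This is not the PMPIC implementation: the function name `advect`, the array layout, the prescribed uniform velocity and the forward-Euler step are all assumptions made for illustration (the report mentions an rk4 group for time stepping, and a real parcel velocity would come from the flow solver). The sketch only shows that moving parcels touches three coordinates per parcel, however many constituents each parcel carries.

```python
import numpy as np


def advect(positions, velocities, dt):
    """Move every parcel one forward-Euler step (illustrative only)."""
    return positions + dt * velocities


n_parcels = 1000
rng = np.random.default_rng(0)

# x, y, z coordinates of each parcel (hypothetical layout).
positions = rng.random((n_parcels, 3))
# Prescribed uniform flow, standing in for a velocity supplied by the solver.
velocities = np.tile([1.0, 0.0, 0.5], (n_parcels, 1))

# Any number of carried constituents; advection never reads or writes them.
constituents = {f"tracer_{i}": rng.random(n_parcels) for i in range(50)}
before = {name: values.copy() for name, values in constituents.items()}

new_positions = advect(positions, velocities, dt=0.1)

# The carried values are identical before and after the position update.
unchanged = all(
    np.array_equal(constituents[name], before[name]) for name in constituents
)
```

Raising the number of tracers from 50 to 500 changes the memory footprint but not the work done in `advect`, which is the property the paragraph above describes.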

[1] Lasher-Trapp, S. G., et al. "Broadening of droplet size distributions from entrainment and mixing in a cumulus cloud." Quarterly Journal of the Royal Meteorological Society 131.605 (2005): 195-220.

[2] Matheou, G., et al. "On the fidelity of large-eddy simulation of shallow precipitating cumulus convection." Monthly Weather Review 139.9 (2011): 2918-2939.

[3] Seifert, A., and T. Heus. "Large-eddy simulation of organized precipitating trade wind cumulus clouds." Atmospheric Chemistry and Physics 13.11 (2013): 5631.

[4] Dritschel, D. G., et al. "The Moist Parcel-In-Cell method for modelling moist convection. Part I: the numerical algorithm." Quarterly Journal of the Royal Meteorological Society 144 (2018): 1695-1718.

[5] Böing, S. J., et al. "Comparison of the moist parcel-in-cell (MPIC) model with large-eddy simulation for a cloud." Quarterly Journal of the Royal Meteorological Society 144 (2019): in press.

Achievement of objectives

The overall aim of the eCSE project was to parallelise the Lagrangian 'Moist Parcel in Cell' (MPIC) code by incorporating it into the 'Met Office NERC Cloud Model' (MONC) framework. MPIC was parallelised only with OpenMP, so it could not scale beyond one node, and its maximum problem size was limited by memory and time constraints. MONC is parallelised with MPI and is known to scale to 30,000 cores. Because both codes have similar functionality, combining them was a logical decision. We called the finalised code 'Parallel Moist Parcel in Cell', or PMPIC.

There were four main objectives for the project:

Objective 1: Introduce the Lagrangian dynamical core into the MONC Framework.

Description: Implement MPIC functionality into MONC, allowing it to benefit from the MPI parallelisation of MONC.

Metric: Support simulations with at least 864³ grid points, each corresponding to 8-100 fluid parcels, using the case described in the draft publication on MPIC. These runs should achieve a parallel efficiency of at least 0.7 on 100 nodes or more.

Comments: Because of memory requirements, at a grid size of 864³ we needed a minimum of 216 nodes to run the simulation to the end. Using this as a baseline, we achieved a parallel efficiency of greater than 70% up to 864 nodes (approximately 20,000 cores).
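For readers unfamiliar with efficiency measured against a multi-node baseline, the calculation can be sketched as follows. The function name and the timings are hypothetical and chosen purely for illustration; they are not measurements from this project. Only the 216-node baseline and the 0.7 target come from the text above.

```python
def parallel_efficiency(base_nodes, base_time, nodes, time):
    """Strong-scaling efficiency relative to a baseline run.

    Achieved speedup (base_time / time) divided by the ideal speedup
    (nodes / base_nodes). A value of 1.0 means perfect scaling.
    """
    speedup = base_time / time
    ideal_speedup = nodes / base_nodes
    return speedup / ideal_speedup


# Baseline: the smallest node count on which the problem fits in memory.
base_nodes = 216
base_time = 1000.0  # hypothetical wall-clock seconds

# Hypothetical timings at larger node counts.
hypothetical_runs = {432: 550.0, 864: 340.0}

for nodes, time in hypothetical_runs.items():
    efficiency = parallel_efficiency(base_nodes, base_time, nodes, time)
    status = "meets" if efficiency >= 0.7 else "misses"
    print(f"{nodes} nodes: efficiency {efficiency:.2f} ({status} the 0.7 target)")
```

Because the baseline is 216 nodes rather than one, the efficiency describes scaling from that point onward and says nothing about performance at smaller node counts.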

Objective 2: Maintain existing OpenMP in conjunction with MPI for the dynamical core.

Description: Whilst implementing MPIC into MONC, parallelise it with OpenMP so that MONC can be used as a mixed-mode MPI/OpenMP code.

Metric: Obtain less than 25% performance loss in synchronisation/idle threads on 12 cores per MPI process on ARCHER.

Comments: OpenMP performance was found to be poor, achieving a speedup of only a factor of 3 for 12 OpenMP threads compared to 1 thread (a parallel efficiency of 25%). We therefore did not meet this success metric. We note, however, that for very large MPI process counts (where the FFT solver begins to scale poorly), using OpenMP threads can mitigate the effects of this poor scaling.

Objective 3: Introduce parcel-based I/O in the MONC framework.

Description: Implement parcel-writing functionality as well as parallel I/O into MONC, and allow MONC's I/O server to be parcel-aware so it can carry out in-situ analysis/reduction on parcel quantities.

Metric: Keep the overhead of parallel I/O and data analytics below 15% whilst providing all diagnostic values currently available in the prototype MPIC.

Comments: We found that parcel variables took up essentially all the memory on the nodes, so having I/O servers hold copies of all the parcel data would require half of all the node memory, doubling the minimum node count for simulations. We therefore decided not to implement parcel support in the I/O server. Instead we focused on writing simple parcel write routines, parallel output of gridded fields and parcel data in NetCDF format, and some Python analysis scripts to read in and visualise the data. This objective is therefore incomplete, and the success metric as originally formulated has not been met.

Objective 4: Modernise the code base of MPIC

Description: Update the MPIC code to use dynamically allocatable arrays and derived datatypes.

Metric: Retain over 90% of current performance using dynamic allocation and derived types.

Comments: It was difficult to isolate and quantify the effects of the refactoring, as we completely rewrote MPIC. Considering single-core performance, however, MPIC originally achieved 353,000 parcel operations per second, whilst PMPIC achieved 563,000 parcel operations per second, a performance improvement of 55%. We therefore achieved our metric and in fact obtained a significant performance increase.

Overall, this eCSE project has allowed PMPIC to scale to many thousands of MPI processes, permitting much larger simulations to be carried out than was originally possible with MPIC. Furthermore, modernisations to the codebase of MPIC achieved a single-core speedup of 55%.

Summary of the Software

The PMPIC code is publicly available on the ARCHER GitHub website (https://github.com/EPCCed/pmpic) under a BSD 3-clause licence. The GitHub site contains further information on compiling the code. We further plan to release the code as a module on ARCHER and, once we have more experience with it, to provide a version on the Met Office code repository (this version might not be as up to date as the git repository).

A makefile is used to compile the code. The NetCDF output components are optional at compile time, because NetCDF output requires a specific version of the NetCDF libraries that includes the Fortran bindings and support for parallel NetCDF. Third-party software requirements are:

- FFTW: tested with 3.3.4.11

- NetCDF: tested with the netcdf-4.6.1 and netcdf-fortran-4.4.4 libraries, compiled with zlib-1.2.11 and hdf5-1.10.4

- MPI: tested with cray-mpich/7.5.5

NetCDF downloads: https://www.unidata.ucar.edu/downloads/netcdf/index.jsp

We have tested PMPIC with the GNU compiler. The component-based architecture makes it easy to add or remove functionality, and components can be assigned to groups. Besides the initialisation, timestep and finalisation groups, we have added an rk4 (fourth-order Runge-Kutta) group. This will make it easy in future to change the time-stepping to a low-storage Runge-Kutta scheme.

The key new development to the software that increases its intrinsic value is the MPI-based parallelism. This means the model will be a feasible alternative to LES or DNS for many applications. It also means the code can be run on a much larger variety of machines.

Scientific Benefits

The new code will allow MPIC to be used for several applications that would not have been possible previously.

- Atmospheric chemistry and detailed cloud physics. Simulations where parcels carry many chemical and microphysical properties (e.g. in a bin microphysics approach) have a large memory footprint. Without distributed parallelism, such simulations would only be possible on very small domains, and therefore not competitive with Eulerian models. With PMPIC, such simulations will be possible on large domains, and take a reasonable amount of time to complete.

- Atmospheric dynamics problems that require a large domain and/or a high resolution. Examples include simulations of ensembles of clouds that resolve the microphysical processes (e.g. evaporation) taking place at the edge of a cloud. One of our future goals is to use the model to study self-aggregation of convection on domains with horizontal dimensions of hundreds of kilometres, as well as the coupling between convection and a circulation driven by sea surface temperature (SST) gradients. A grant proposal that aims to fund this work is near completion.

- The uptake of PMPIC for a wider range of problems. These include detailed modelling of the ocean mixed layer, entrainment in gravity currents, and low-Prandtl number flows in astrophysics. We are already working on the latter application with Prof. Steve Tobias at the University of Leeds. The parallel and modular nature of PMPIC makes the model competitive and easier to adapt to such problems.

- Since the code base is relatively small, it can be used to test developments in computational and numerical methods for particle/parcel-based codes in a more general context. Examples include new approaches to communication between processors and further changes to the solver (where we are aiming to reduce the number of FFTs).

Figure 1: A cross-section of total effective buoyancy (including latent heating by condensation) in an MPIC simulation of a thermal rising into a stably stratified atmosphere. The blue regions are relatively dense and less buoyant. Many fine-scale features can be made out, and these are strikingly similar to features found in real clouds. The simulation was performed on an underlying grid of 864³ cells, and was made possible by the work carried out in this eCSE project.